On January 20th, the Chinese AI company DeepSeek released a language model called R1, which sent shockwaves across the global AI community. The release came with impressive claims about the model's development and capability: R1 was reportedly built for 10% of the supposed cost of OpenAI’s o1 and was nearly twice as fast on benchmarks. According to the technical report, this was possible because R1 relied less on human labelling and instead used an automated form of training. But what about the data? Where did it come from, and how is it used?

The AI Data Transparency Index

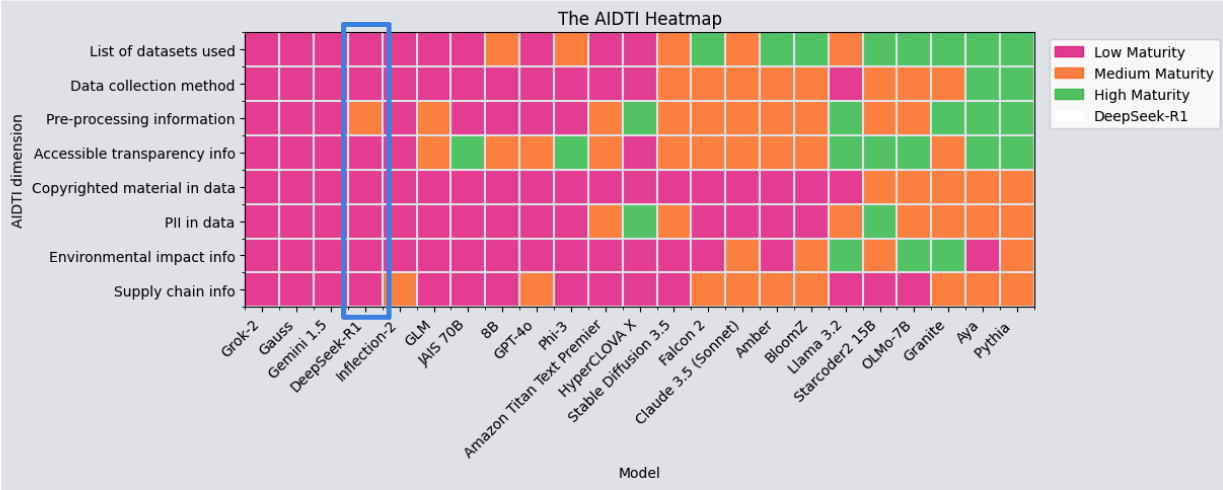

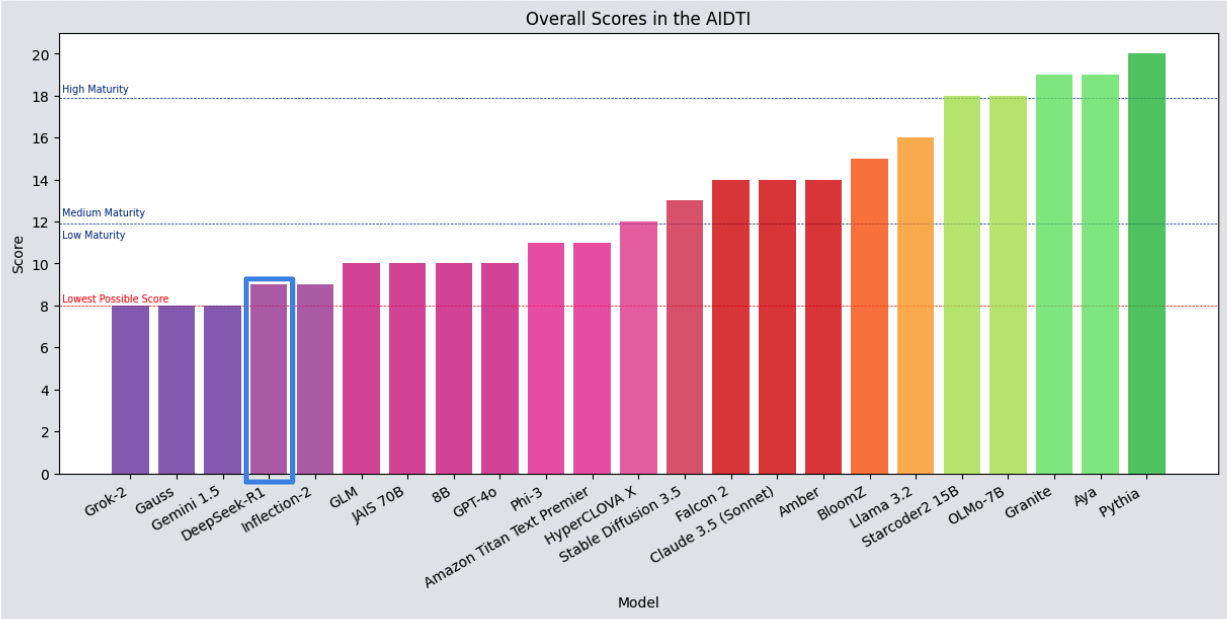

Last month, the ODI launched the AI Data Transparency Index (AIDTI), a new framework for analysing whether model providers share the information needed for meaningful data transparency. The AIDTI assesses model providers across seven dimensions, looking for transparency on things such as dataset sources and collection methods, the processing activities carried out, and whether the datasets have been checked for copyrighted or personal data.

Through this process, we analysed 22 models from around the world, including several that, like DeepSeek, claim to be ‘open source’. Our analysis found that:

- High maturity was demonstrated by five model providers, characterised by detailed, accessible documentation, consistent use of transparency tools, and a proactive approach to explaining decisions made in the development process.

- Six model providers met some transparency criteria but lacked consistency for all dimensions and were therefore considered medium maturity.

- Eleven model providers demonstrated low maturity with limited or poor-quality information, suggesting a general reluctance to be open.

We thought it would be a good opportunity to see how DeepSeek compared to its established competitors.

Two ODI researchers independently applied our AI Data Transparency Index methodology to DeepSeek R1, and you can see the comparative results below.

DeepSeek and data transparency

DeepSeek was unfortunately ranked at low maturity on all but one of our seven transparency dimensions. It reached medium maturity on processing, thanks to relatively detailed summaries of the pre-processing and post-training activities that went into developing R1. On every other dimension, DeepSeek scored poorly. There was no clear list of the datasets used to train the model, and no transparency tools (such as model or data cards), which help make AI models more transparent and accessible. No details were shared on whether the data included copyrighted material or personal information, or on any protections in place for either. The same was true of information on the full data supply chain. And despite some impressive claims about the model's compute efficiency, the full environmental costs of its development were not shared.

Overall, DeepSeek’s low data transparency maturity puts it on a par with Inflection-2, moderately better than X’s Grok 2 and Google’s Gemini 1.5, and marginally worse than OpenAI’s GPT-4o. All of these models are still considered low maturity in data transparency, and languish far behind the likes of Cohere’s Aya and EleutherAI’s Pythia. DeepSeek R1 is not an open source model, nor is it a new standard for data transparency.

In practice, DeepSeek’s low data transparency maturity means that we cannot verify:

- whether data from competitors was used, as OpenAI and Microsoft are reportedly investigating

- DeepSeek’s cost claims, since R1 was largely bootstrapped from a previous model whose training costs have not been released

- DeepSeek’s training efficiency claims, although some calculations suggest there is truth to them

Conclusion

Despite multiple claims that DeepSeek’s AI model is ‘open source’, in reality it is not. While the model weights were released and the model architecture was described in a technical paper, neither the code nor the training or evaluation data were shared openly. An analyst for the Open Source Initiative also confirmed that DeepSeek is not Open Source AI and does not meet the requirements of the Open Source AI Definition. It joins other models that claim to be open source but score poorly on data transparency.

Ultimately, this means that many of DeepSeek’s impressive claims of highly efficient model development cannot be validated. For this to be a truly disruptive event, DeepSeek will need to become much more mature in the data transparency information it shares.

Overall, data transparency remains a relevant mechanism for judging new models and providers. We need more transparency not just from DeepSeek, where some people have concerns about the sources of its data and the privacy risks to users, but from all model providers, whether American or Chinese, open or closed, massive or tiny.