Modern technology innovation means difficult choices for regulators, and is also affecting international discussions about data and technology in trade. Lawrence Kay, ODI Senior Policy Advisor, discusses.

Companies are innovating with more creativity and speed thanks, in part, to the ease with which data can be used and shared in new products and services.

Speed, coupled with greater diversity in innovation, creates a problem for regulators: although it suggests the possibility of gains in economic growth and social welfare on the one hand, it also raises the prospect of new harms on the other. This can create uncertainty about what action to take. The problem is exacerbated by the cross-border nature of much modern technology development, which draws domestic regulators into questions of how to engage in international regulatory cooperation (IRC) that encourages trustworthy data sharing, boosts innovation, and raises economic competitiveness.

Agent-based model

The ODI’s playable simulation of data sharing in a simple economy shows some of the difficulties that data regulators face. The model includes different types of companies and consumers, with the consumers providing data to the companies as they use their products; and the companies then being able to share data with each other to make products that better serve the consumers’ needs. There are two ways in which regulators can affect this process in the model: by requiring companies to be more open to sharing data; and by influencing the trust – through what can be thought of as a proxy for regulation – that consumers have in providing data to companies.

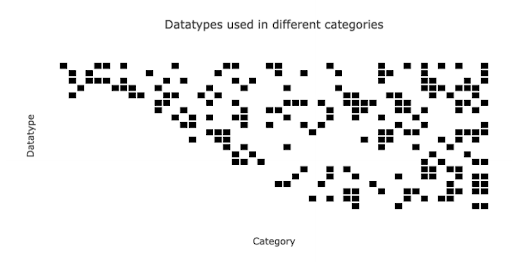

When consumers in the model have good reason to trust companies, and companies share lots of data with each other, the result is a rich process of ‘combinatorial innovation’ – different types of innovations working together.
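To make the mechanics concrete, here is a minimal toy sketch of the two levers described above. It is not the ODI's simulation: the names `Company`, `simulate`, `trust` and `openness`, and the idea of measuring product complexity as the number of distinct data types a company holds, are all assumptions made for illustration.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Company:
    data_type: str                                # the kind of data its product collects
    holdings: set = field(default_factory=set)    # data types it can currently build with


def simulate(trust, openness, n_companies=4, n_consumers=50, rounds=5, seed=0):
    """Return average product complexity: distinct data types held per company.

    trust    -- assumed probability that a consumer provides data when using a product
    openness -- assumed probability that one company shares its data with another
    """
    rng = random.Random(seed)
    companies = [Company(data_type=f"type_{i}") for i in range(n_companies)]

    for _ in range(rounds):
        # Each consumer uses one company's product (assigned round-robin here)
        # and provides data only if they trust the company enough.
        for consumer in range(n_consumers):
            company = companies[consumer % n_companies]
            if rng.random() < trust:
                company.holdings.add(company.data_type)

        # Companies pass on what they hold to each other, subject to openness.
        for giver in companies:
            for receiver in companies:
                if giver is not receiver and rng.random() < openness:
                    receiver.holdings |= giver.holdings

    return sum(len(c.holdings) for c in companies) / n_companies


if __name__ == "__main__":
    for trust, openness in [(0.0, 1.0), (1.0, 0.0), (0.5, 0.5), (1.0, 1.0)]:
        print(trust, openness, simulate(trust, openness))
```

In this sketch, full trust with no sharing leaves every company with only its own data type, while no trust leaves nothing to share however open companies are. Only when both levers are high does each company end up able to combine every data type, which is the toy equivalent of the more complex products described above.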

The diagram below illustrates how innovation processes that are more trustworthy and encourage data sharing lead to more complex products – as with the categories on the right. And as the goods become more complex, they satisfy a much richer range of consumer needs.

The model gives a conceptual grasp of the role of public action in data sharing for modern technology: to create conditions in which data use can become more complex and useful. This objective applies to all data institutions – rules, norms, and organisations – in an economy.

From concept to reality

But policymakers face a considerable problem in going from the conceptual to the actual: the difficulty of predicting the future of data-enabled innovation. It’s hard to know what an innovation will be, and what its effects will be. A new technology could be disruptive in the short term, creating big changes in the structure of industries, and in work and consumption patterns, that attract lots of public attention; but it could also create many small changes over a long period of time that might have an even bigger impact.

America’s National Bureau of Economic Research (NBER) discussed a range of economic and social effects that could follow from widespread adoption of artificial intelligence (AI) in The Economics of Artificial Intelligence: An Agenda. Among many papers, Anton Korinek and Joseph Stiglitz describe the ways in which AI innovators might capture gains and AI-induced unemployment could arise; Ajay Agrawal, John McHale and Alex Oettl suggest that AI might greatly enhance the ability of innovators to predict product success, leading to better allocation of research resources; and Paul R Milgrom and Steven Tadelis discuss the role of AI in markets and how this might affect market design. The economist W Brian Arthur argued in The Nature of Technology that a general purpose technology like AI is something around which an economy adapts, creating many new jobs and organisations that were not needed before – as with the introduction of the railways – and hence causing big changes in the demand for skills in the workforce, and in health and safety regulation.

The Collingridge dilemma

The problems posed by combinatorial innovation for policymakers have been widely accepted as best defined by the ‘Collingridge dilemma.’ David Collingridge, an academic at the University of Aston in the 1980s, argued that efforts by policymakers to affect the development of technology suffered from a ‘double-bind’ problem: that it’s difficult to predict the effects of a technological change until it has become widely adopted; but that once a technology has become entrenched in business and social practices, changing its effects will have become hard.

In other words, it’s difficult for policymakers to know what to do in response to fast, combinatorial, data-driven innovation and the possible effects identified by the NBER. Is it better to prevent technological change early, based on poorly evidenced expectations and with the risk of inhibiting future benefits, or wait for stronger evidence on the balance between benefits and harms, knowing that one’s options might be narrower in the future? There is, in short, a time asymmetry between innovation and regulation.

‘Permissionless innovation’

‘Permissionless innovation’ has been proposed as an approach for solving the dilemma faced by policymakers. Adam Thierer of the Mercatus Center at George Mason University argues in Permissionless Innovation and Public Policy: A 10-Point Blueprint that innovators need to know that regulators accept their risk taking, experimentation, and willingness to challenge existing practices, in order to produce the economic growth of the future. Thierer describes the gains from digital technology produced by US firms in Silicon Valley during the 1990s and 2000s as the result of such an attitude, and argues that ten principles should be applied to the development of autonomous vehicles, drones, robotics, and the like, all of which will involve data policy questions. The ten principles include removing barriers to innovation, relying on existing legal systems, using insurance markets, using industry self-regulation, and targeting new legal measures on only the hardest problems.

Anticipatory regulation

A larger body of literature on how to deal with the characteristics of combinatorial innovation is best described by Nesta as ‘anticipatory regulation.’ Rather than allow technology to develop and then deal with problems, this approach sees a role for regulators in trying to predict the future path of innovation; coordinating a plural set of views in the setting of rules for new technology; using principles and aiming at outcomes rather than establishing targets; and ultimately developing the regulatory framework through a process of ‘coevolution’ with technology. In our blog on anticipatory regulation, we describe some of the ways that have been proposed for implementing it.

Collaborative efforts

Domestic regulators have a range of ways in which they might create the conditions for data use to become more complex and useful, boosting economic competitiveness. But they also need to think about how their choices meet the approaches of other countries, and how this will affect cross-border data sharing.

Given the degree of uncertainty over future technology and the sensitivity of some data that will be used to inform it, countries could benefit from starting collaborative efforts early so that they have developed the mutual understanding and processes that will help them to deal with difficult technological questions later.

And to do that they have many types of international regulatory cooperation to choose from, including regulatory partnerships, knowledge networks, and informal coordination.
