In 2019 we were thrilled to work with our partner Co-op and its Data Team on a project about data protection and trust in data.

As Co-op is a member-driven organisation, listening to, engaging with and hearing the voices of Co-op Members – particularly on issues relating to building and maintaining trust – is an important part of the work it does.

With this in mind, the work we did was inspired by Co-op Data Team’s 2017 project ‘Being trusted with Data’, which identified that, for Co-op, being trusted with data is built on three things: integrity, transparency and meaningful consent. We felt it would be interesting to revisit the 2017 project and test Co-op Members’ perceptions about data, particularly following two key data events that took place in 2018.

The first was the Facebook/Cambridge Analytica scandal; the second was the Data Protection Act 2018, which incorporates the General Data Protection Regulation (GDPR). The GDPR gives people greater rights over how data about them is collected, held, used and shared, and places greater responsibilities on the organisations that handle it.

The scandal and the regulation arrived within weeks of each other, leading to persistent front-page news and a widespread societal conversation about control, consent, privacy, security, rights and responsibilities regarding the use and abuse of data about us.

Members’ survey

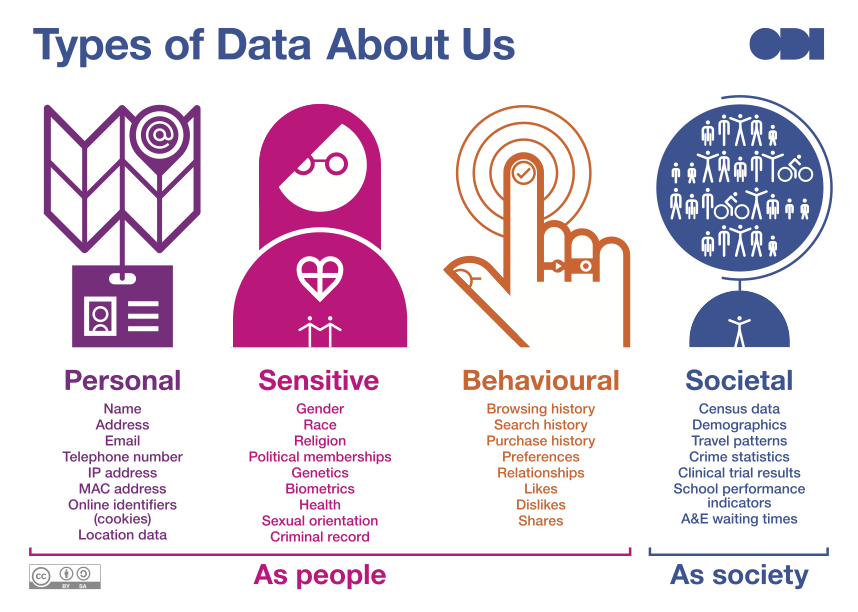

Part of our approach built on work we have been doing with the Royal Society of Arts and Luminate, which involved listening to how people understand and talk about data. The graphic above – outlining four types of data about us: personal, sensitive, behavioural and societal – was used as the basis for exploring whether people are comfortable or uncomfortable sharing particular types of data about them.

We also decided to re-run Co-op’s 2017 survey to look at Co-op Members’ current perceptions, adding questions to explore their awareness of, and comfort with, data tracking, cookies and the potential for data collected by Co-op to be used for societal good.

The survey, launched in September 2019, received a fantastic response, engaging over 500 Co-op Members.

The survey revealed that Members have a good knowledge of data protection. A large majority (88%) told us they were aware of the GDPR, and half (50%) were aware of their rights under it.

Given this level of awareness about data protection and the role it plays in our data lives, it was reassuring for Co-op to learn that just over two thirds (69%) of its Members trust it to keep data about them safe and secure.

Again, 88% of Members said they understood that their web browsing history was used for marketing purposes through cookies, even though 58% told us they never read the cookie information provided by Co-op and 45% told us they don’t read the Co-op privacy policy.

Of particular interest to us was learning that just over half (56%) of respondents check for secure symbols or assurance marks on a website before submitting data about themselves.

Nuance and context

While quantitative data can provide an interesting insight into people’s reactions to specific questions, we have found that when it comes to thinking about how data about us is used, nuance and context are critical factors.

With this in mind, we followed up the survey with a workshop in Manchester in November 2019, attended by Co-op Members, some of whom were also Co-op staff.

We asked our workshop participants a range of questions about the approach they take to managing their safety, security and privacy online. We sought to gain insights into their awareness and understanding of cookies, privacy policies, web browsing and tracking, and their thoughts on how data about them is collected and used online and offline.

Finally, we asked them to help us define what good practice would look like for how data about us is used, and what opportunities Co-op and similar organisations might take to build, and maintain, consumer trust.

What we learned

The conversations we had were illuminating. The workshop participants demonstrated a range of approaches to managing their online lives. We learned that their decisions about trust, privacy and security are contextual, depending very much on where they are, what they are doing, what device they are using and what they are searching for. People use a range of approaches to judge whether an online service is trustworthy and secure.

Participants demonstrated a reasonable understanding of how cookies work and how adverts and benefits are served to us, and made clear that they understood their preferences and behaviours were integral to the process. Comfort with this was mixed. Many expressed a reluctant acceptance that being shown adverts was just part of the way things work now, though not everyone shared this view.

This sense of resignation appeared to go hand in hand with complacency among some participants about using cookie opt-outs and controlling access to personal data. Even those who said they didn’t want to share data didn’t describe taking active steps to prevent access, other than one person who said they use an ad blocker.

We tested which approach might help people engage more with cookies: a lengthy cookie policy, or a pop-up with options to opt in. Despite finding pop-ups irritating, participants agreed that a pop-up was clearer and provided more accessible and immediate information.

Participants raised concerns about the lack of detail on what people are actually consenting to, and about how personal data about us is accessed by, and shared with, third parties, particularly social media companies.

Participants raised serious concerns when they learned that automated decision making based on scoring people can be used to determine what offers or services an organisation makes available to individuals. They wanted much more detail about what scoring meant, what it consisted of and how they could dispute decisions.

Unsurprisingly, we heard that terms and conditions and privacy policies are still mostly ignored. They were seen as meaningless because they offered no real choice or control for the user. We were told that the documents protect the companies rather than the person, and that reading them “doesn’t make any difference, if you want to use their service you have to accept them no matter what”.

On a positive note, participants expressed support for organisations publishing data that could help society make better decisions, for example data gathered through insurance services, so long as the organisation providing the data didn’t benefit financially and the people the data may relate to couldn’t easily be re-identified.

Five key recommendations

Based on what we learned, we have five key recommendations that Co-op and similar organisations should consider to ensure that the trust consumers place in them is honoured.

- Be transparent. Whether it concerns the purpose of using data, how long it is retained, or who it is shared with or accessed from and why, organisations should be as transparent and honest as possible to maintain an open and trusted relationship.

- Review cookie policies and consider whether they meet user expectations and GDPR requirements. Many organisations will have done this in the run-up to the GDPR in 2018, but if it’s still on the to-do list, make it a priority.

- Review how you share and use data about users and customers with social media companies. If you rely on accessing data about your customers or users from their social media profiles, consider whether this is really necessary. We recommend a review based on the questions posed in our Data Ethics Canvas.

- Consider the use of automated decision making about consumers and users. Ask whether scoring users and making automated decisions with algorithms is really essential to the service, and whether greater transparency about the process is needed.

- Openly publish data that could help society make better decisions, but ensure the data is non-personal or anonymised and isn’t shared to make money for the organisation.

Next steps: ideas for further exploration

Areas for consideration and further exploration:

- Running a similar workshop with consumers/members/users to learn their thoughts on what your organisation is doing with data, be it societal or personal data.

- Running a user workshop to explore and test your privacy and cookie policies, your terms and conditions and the language you use to describe and explain your organisation’s use of data with consumers/members/users.

- Publishing details of your use of scoring and automated decision making, including what data is used and how scores are applied. Engaging honestly and clearly with your consumers/members/users will help you understand their concerns and consider how to build trust in how your organisation uses algorithms.

- Working in the open: running regular surveys, asking, listening and engaging.

Get in contact

The ODI has a range of tools and guidance that may assist you with these next steps. Feel free to get in touch and have a chat with us.

Find out about the ODI’s work with retail organisations on issues of data protection, consumer trust and data portability.