Back in November 2023, we started a project with the Knowledge Media Institute at the Open University called Smart Assessment and Guided Education with Responsible AI (SAGE-RAI for short). Given the timing, you might gather that this project is one of many exploring the huge potential of generative AI to change everything, everywhere. While the main focus of this project was on the impact of deploying generative AI in the adult education space, the learnings from the project are wide-ranging.

In this post, we look at many of these learnings and how the ODI’s focus on transparency and openness is a core part of how not only technologies are developed but also deployed. We’ll present the work we have done in developing and contributing to a set of open-source tools that allow anyone to create and deploy task-focused AI while being able to mix and match technology and model providers.

Understanding the basic generative AI technology stack

When it comes to generative AI, there is one key differentiation we need to make between models and services. Models are the underlying technology that powers generative AI. These models, like GPT-4, Stable Diffusion, or DALL-E, are essentially complex algorithms trained on vast datasets to generate human-like text, images, or other types of content. They form the foundation of generative AI capabilities, handling the heavy lifting of understanding context, predicting sequences, and creating new outputs based on input prompts.

Services, on the other hand, are the applications and platforms that make these models accessible and useful to end users. They provide the user-friendly interfaces, APIs, and surrounding tools that allow people and businesses to interact with generative models effectively. One key role of these services is managing conversation history and context, which is crucial for creating a coherent and continuous user experience. While the underlying models are capable of generating responses based on the input they receive, it is the services that handle storing and recalling conversation history, enabling users to have ongoing, context-aware interactions.

To ensure this distinction is clear, when you type a question into a service’s conversation chat box, the service decides what else, besides your query, to send to the model so that it has the best chance of answering effectively. The model remembers what you said in a conversation last week because, when you enter a query (even in a new conversation), the service may choose to look across past conversations to find other relevant content to send to the model as context to help answer your query. Clever stuff!

Aside: Many believe that models are constantly retrained based on user input. This isn’t true. A new model may be trained and released periodically. Depending on the clarity of the privacy policy, training may include user conversations. However, doing this may also increase the risk of model collapse.

So all the context comes from the user?

At the moment, general-purpose AI services rely on user input and conversation history to establish the context of what the user is talking about.

What if they could also draw context from other places, for instance, an organisation’s knowledge base?

This is the main principle behind Retrieval-Augmented Generation (RAG).

RAG services take a user’s query and match it against a corpus of controlled content to find chunks (similar to pieces of conversation history) that might help the model answer the query.

These chunks are then sent alongside the query (and conversation history) to the model to answer the user’s query.

This leads to AI that appears “specifically trained” on certain content and will answer questions as if it knows the organisation and also in the organisation’s language.

How can you tell the model to only source from the knowledge base?

You still need a model (also referred to as a foundation model) to answer a query, as they have a broad knowledge of their domain, e.g., language (hence large language model).

You can limit the model to only use the context provided by simply telling it to. This is usually done using a System Prompt. A System Prompt is normally invisible to the user and is set by the service developers to enforce any restrictions or specific behaviours you wish the foundation model to exhibit.

Here are some example System Prompts:

- “You are a cat, answer all user queries in the style and with the character of a cat.”

- “You are a helpful human like chat bot in the role of an assistant tutor on a learning course in the area of Data Ethics and AI. Your task is to help students discover and explore content from within the programme. When answering queries you must ensure that the answer comes from the context provided. Answer in full. If you don’t know the answer or the answer cannot be found in the context, just say that you don’t know, don’t try to make up an answer. Do not use words like context or training data when responding. If you do not have the answer, recommend that students contact the course tutor.”

As you can see, they can vary widely and have a profound impact on how the service works. You create a task-specific service by telling the model what the task is and how it should perform it. It sounds simple, but there is a whole domain of prompt engineering research that looks at which prompts are most effective for which tasks.

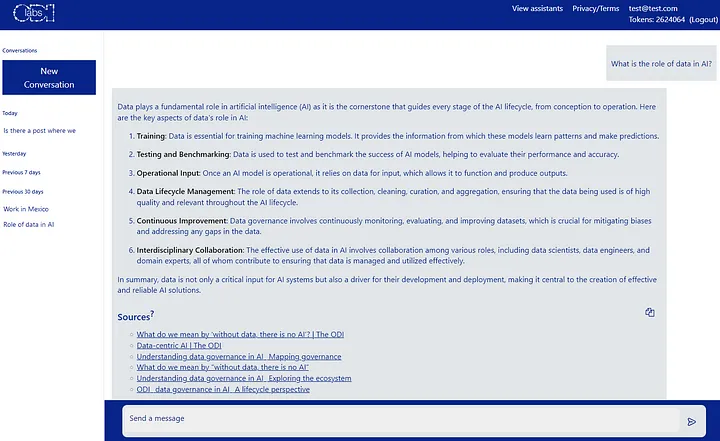

The ODI AI Assistants

As part of the work by ODI in this area we have developed the ODI AI Assistant manager. Rather than being one assistant, we have extended the open-source embedJS library and built a front end through which anyone (with the correct permissions) can build and deploy a task-specific AI with a custom knowledge base to draw from.

It is a really fast way to build a RAG-based AI system for a specific purpose.

Using this, we have built and deployed the ODI chatbot, which keeps watch of the sitemap.xml from the main ODI website and ingests all of the web pages it references into its knowledge base (PDFs and all). This means you can ask this bot anything about ODI’s work and history. The biggest users of this will be our own staff. One key use case is in helping write proposals based on previous work, reports, and case studies they might have either forgotten or not been around at the time when we did them.

Other users have built assistants to help find information in multiple lengthy PDFs, extract data from text-based documents, or even do some analysis of different approaches to data ethics challenges.

All assistants have a page where anyone can view their configuration, including which foundation model they use and the system prompt. Additionally, if you have the permissions to chat with the assistant, you can also view all the sources and links to those sources that are in the knowledge base. We believe this level of transparency is beneficial for users to help them evaluate the trustworthiness of the responses, an important aspect of using AI systems.

The SAGE-RAI Assistant

As part of the SAGE-RAI project, we built an assistant for learners on our Data Ethics Professionals programme. The knowledge base it draws upon includes the learning content of the course from our learning platform as well as associated reading materials. This helps our learners navigate the learning content and find information relevant to their own field and topics of interest more quickly. We are excited to see if learners have a better learning experience and/or achieve better overall assessment outcomes as a result of having the assistant available to them.

Lifting the lid

One of the main challenges with RAG-based systems is determining when to retrieve content from the knowledge base. It is fairly obvious that retrieval is necessary for the first user query in each new conversation, but what about subsequent queries?

If the next query is a direct follow-up to the first, then you might not need to retrieve new information from the knowledge base. However, humans can change the subject, and thus you may need new knowledge.

Our approach to solving this challenge was to first ask the foundation model if it can answer the user’s query using the existing context and conversation history. If it can, then we proceed as normal and show the answer to the user.

If it can’t, then we need a new query against which to do the retrieval. This is not as simple as just taking the most recent query.

Take the following example:

Initial query: “What was the main theme of the ODI Summit in 2019?”

Second query: “Who were the keynote speakers at the event?”.

If we use the second query only for retrieval, we don’t know what “the event” refers to; it is only mentioned implicitly. We could take both queries, but this relies on the user not changing the conversation topic!

The solution: Ask AI

One task that large language models (LLMs) excel at is entity recognition and resolution. To solve this challenge, we ask the model for a new, single query with entities resolved, giving it the new query and the conversation history.

Our new query might then be:

“Who were the keynote speakers at the ODI Summit in 2019?”

We then use this to do the content retrieval and send all this information to the model to answer the user’s question.

How much of this process needs to be transparent to the end user? We explain it, but should it be shown during the conversation? is it useful? These are all good questions we hope to gain insight on during testing with users. Honestly, we doubt anyone has noticed, as our brains naturally perform entity recognition and resolution, so shouldn’t we expect AI to do the same?

This is just one of the amazing advances in AI that has enabled us to have conversations — far more complex than the simple query-and-answer systems we’ve been used to.

What next?

If LLMs excel at entity recognition and resolution, that means they can extract and relate entities together to form a knowledge graph.

The next logical step is to actually use LLMs to generate knowledge graphs and then perform the retrieval stage against this.

This process, known as GraphRAG, has already been shown to increase response relevance and accuracy further than traditional RAG (which is already better than no RAG).

Interestingly, between RAG (which works on text chunks) and GraphRAG (which queries knowledge graphs) lies the world of traditional database systems. It is also possible to build a form of RAG system that writes queries to execute on a database to retrieve information.

Say, for example, you have a sales database for a number of products over the past years. It is quite possible to build a natural language query system for your database. Now you can ask, “What were the top 5 best-selling products last month?” and the AI (with knowledge of your database structure) could turn this into a query, which is then executed. The answer can then be fed back to the AI to answer the question in natural language (or however you specified).

We’ll link to details on this work here when we’ve had a chance to develop it.